Multi-Camera Parallel Tracking and Mapping in Snow-Laden Environments

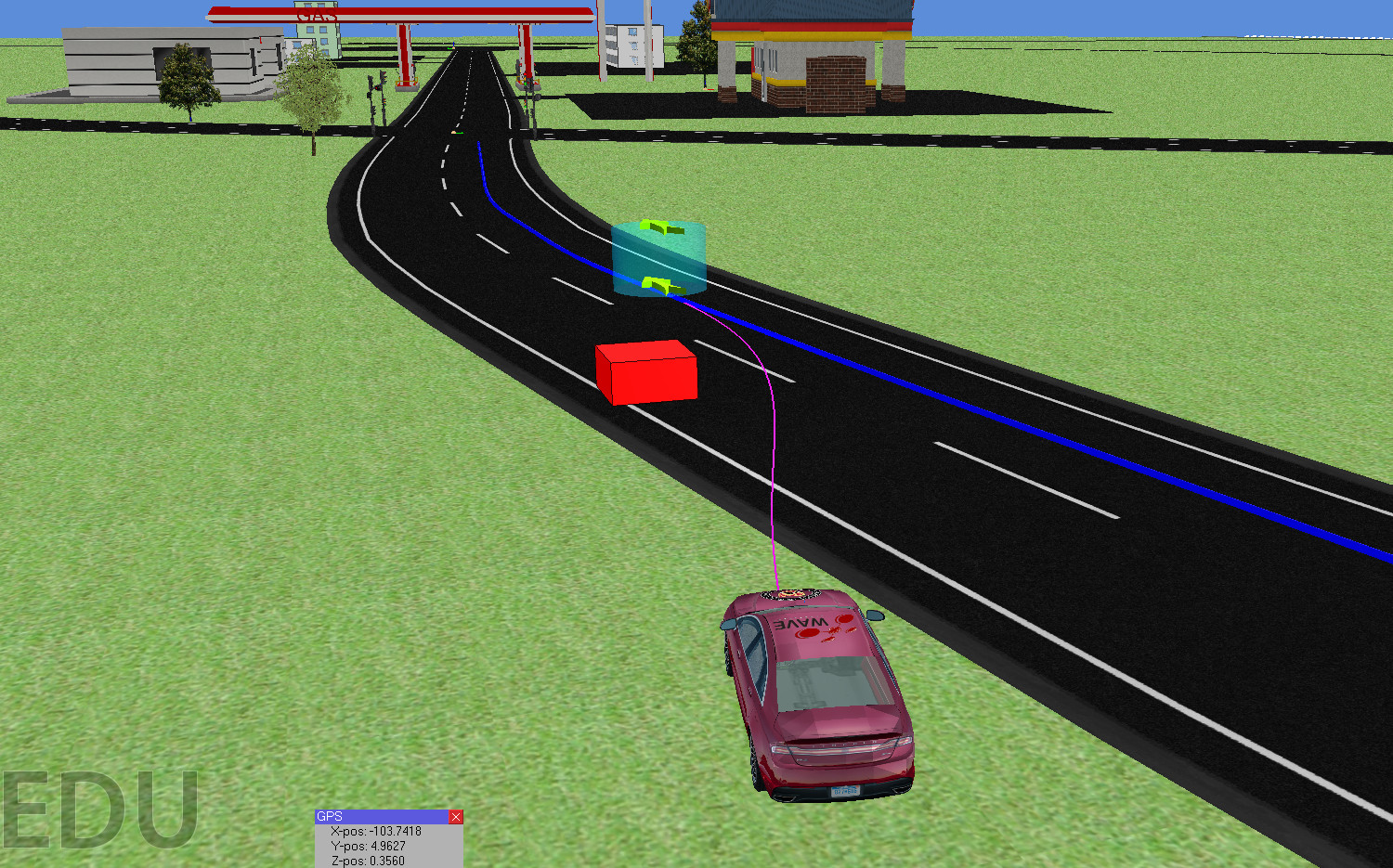

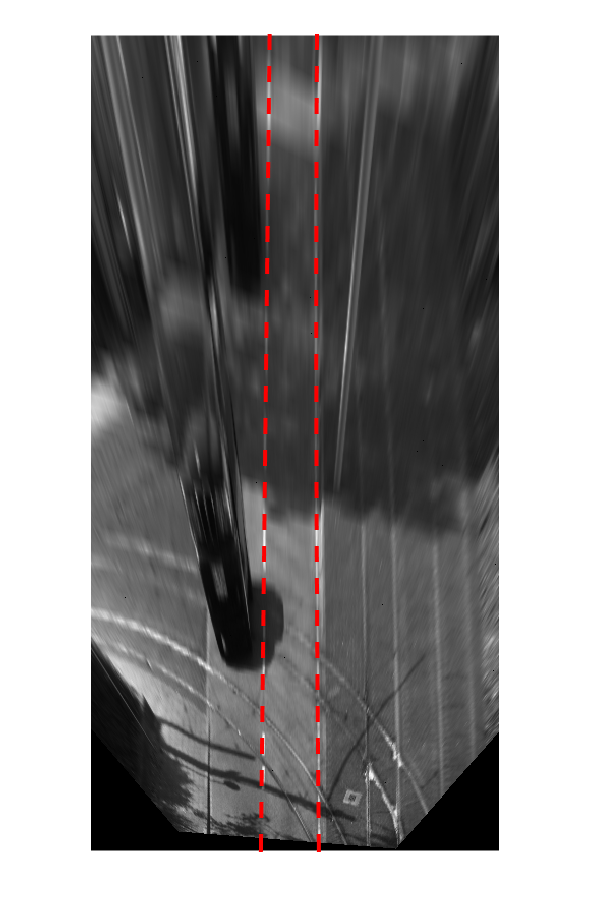

Robot deployment in open, snow-covered environments poses challenges for existing vision-based localization and mapping methods. A limited field of view and over-exposure in regions where snow is present make it difficult to identify and track features in the environment. The wide variation in scene depth and the relative visual saliency of points on the horizon result in clustered features with poor depth estimates, and cause typical keyframe selection metrics to fail to produce reliable bundle adjustment results. In this work, we propose using Multi-Camera Parallel Tracking and Mapping (MCPTAM), together with two extensions to it, to improve localization performance in snow-laden environments. First, we define a snow segmentation method and snow-specific image filtering to enhance the detectability of local features on the snow surface. Second, we define a feature entropy reduction metric for keyframe selection that reduces map size while maintaining localization accuracy. Both refinements are demonstrated on a snow-laden outdoor dataset collected with a wide field-of-view, three-camera cluster on a ground rover platform.
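The abstract names a feature entropy reduction metric for keyframe selection but does not define it. The following is a minimal sketch of the general idea, not the authors' formulation. It assumes that each landmark's depth uncertainty is a 1-D Gaussian, and that a candidate keyframe would contribute an independent Gaussian depth observation, which is fused in information form. The class `Feature`, the functions `entropy_reduction` and `should_add_keyframe`, and the `threshold` parameter are hypothetical names chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Feature:
    # Hypothetical landmark model (assumption): 1-D Gaussian depth uncertainty.
    prior_var: float  # current depth variance of the landmark (m^2)
    obs_var: float    # depth variance of the observation a candidate keyframe would add (m^2)


def gaussian_entropy(var: float) -> float:
    """Differential entropy (nats) of a 1-D Gaussian with variance `var`."""
    return 0.5 * math.log(2.0 * math.pi * math.e * var)


def fused_variance(prior_var: float, obs_var: float) -> float:
    """Fuse two independent Gaussian estimates by adding their information (1/variance)."""
    return 1.0 / (1.0 / prior_var + 1.0 / obs_var)


def entropy_reduction(features: List[Feature]) -> float:
    """Total entropy drop (nats) over all features if the candidate keyframe is added."""
    total = 0.0
    for f in features:
        before = gaussian_entropy(f.prior_var)
        after = gaussian_entropy(fused_variance(f.prior_var, f.obs_var))
        total += before - after
    return total


def should_add_keyframe(features: List[Feature], threshold: float) -> bool:
    """Hypothetical selection rule: keep the keyframe only if it reduces entropy enough."""
    return entropy_reduction(features) > threshold


if __name__ == "__main__":
    # Nearby snow-surface features: the new view halves their depth variance.
    near = [Feature(prior_var=1.0, obs_var=1.0) for _ in range(2)]
    # Distant horizon features: little parallax, so the new observation is nearly uninformative.
    far = [Feature(prior_var=1.0, obs_var=1e9) for _ in range(10)]
    print(entropy_reduction(near), should_add_keyframe(far, threshold=0.1))
```

Under these assumptions, each feature contributes `0.5 * ln(1 + prior_var / obs_var)` nats. Distant horizon points observed with poor parallax therefore add almost nothing, and a frame dominated by such points is rejected as a keyframe. This is one way a rule of this kind could keep the map small without discarding informative views.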

References:

A. Das, D. Kumar, A. El Bably, S.L. Waslander. Taming the North: Multi-Camera Parallel Tracking and Mapping in Snow-Laden Environments. In Field and Service Robotics, Toronto, ON, Canada, 2015.